Do you need an LLM for that?

LLMs are being used for everything these days, and it’s easy to see why. With relatively little upfront effort you can build extremely impressive prototypes that used to take weeks to build. While GPT is easily the most popular LLM right now (and GPT-4 is by far the most capable), there is a wide variety of LLMs available today, each of which comes with its own tradeoffs.

I have a post in the works about choosing the right LLM for your use case which I hope to be able to publish soon. In the meantime, this post is about when you shouldn’t use LLMs to solve a problem.

While LLMs might be tremendously useful and are shaking up the field of NLP, they are not a silver bullet for all problems. They are expensive, slow, and often fail to match state-of-the-art model performance on non-generative tasks. You can’t just write a prompt and call it a day if you want to ship a high-quality experience to your production users.

Generative vs non-generative tasks

Broadly speaking, we can partition machine learning tasks into “generative” and “non-generative” buckets. Generative AI models like LLMs are, naturally, very well-suited for generative tasks.

When I built Crimson’s internal suite of recommender engines, GPT was a massive productivity multiplier because recommending an extracurricular activity to a student is fundamentally generative in nature. We don’t even really need to do all that much. From a bird’s-eye view, the process looks roughly like this:

- Read key demographic information from the student’s profile, such as their intended major.

- Summarize the activities they’ve already been involved in.

- Use that context in tandem with a prompt to materialize an entirely new and original extracurricular from the ether.

- Run some self-checks using the model to verify the generated recommendation.

Written out like that it sounds complicated, but in reality all we’re doing is making a few database lookups and executing a handful of prompts. While there was a decent bit of prompt engineering, data exploration, and user experience design that went into this project, the implementation was trivialized by the existence of GPT.
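The four steps above can be sketched roughly as follows. This is only an illustration of the shape of such a pipeline: the `Student` type, the prompts, and the `recommend_activity` function are all hypothetical, and the model call is passed in as a plain function so that no particular API is assumed.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional

# Hypothetical types and prompts for illustration only; none of these
# names come from the real system.
LLM = Callable[[str], str]  # takes a prompt, returns the model's text


@dataclass
class Student:
    intended_major: str
    activities: List[str]


def recommend_activity(student: Student, llm: LLM, max_attempts: int = 3) -> Optional[str]:
    # Steps 1-2: read profile details and summarize existing activities.
    summary = llm("Summarize these activities in two sentences:\n" + "\n".join(student.activities))
    for _ in range(max_attempts):
        # Step 3: generate a new extracurricular from that context.
        idea = llm(
            f"Intended major: {student.intended_major}\n"
            f"Existing activities: {summary}\n"
            "Propose one new, original extracurricular activity."
        )
        # Step 4: ask the model to check its own output.
        verdict = llm("Answer YES or NO. Is this activity specific and feasible?\n" + idea)
        if verdict.strip().upper().startswith("YES"):
            return idea
    return None  # no candidate passed the self-check
```

In practice `llm` would wrap whichever completion API you use; injecting it as a function also makes the pipeline easy to test with a stub.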

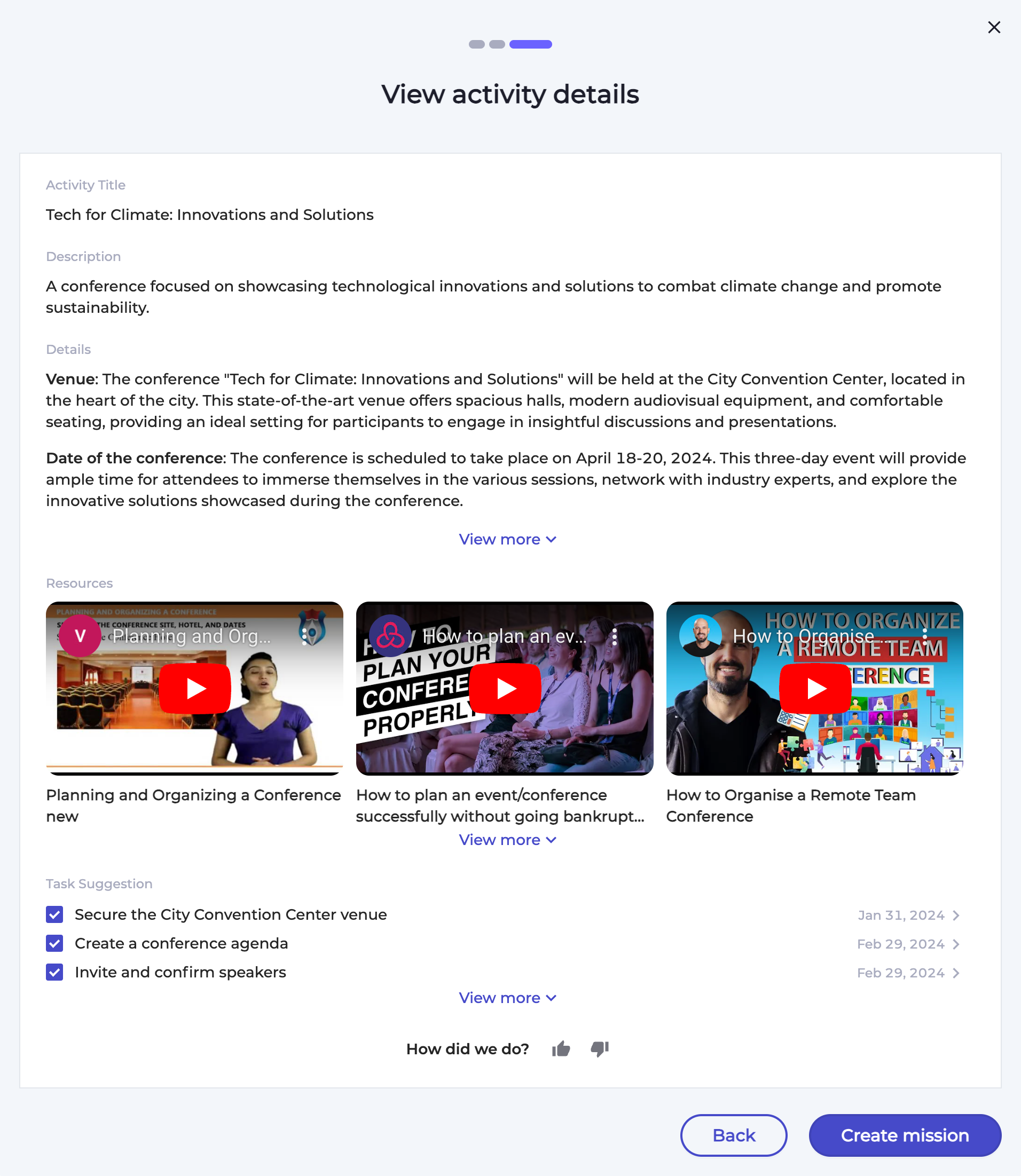

You can see an example of a generated extracurricular activity in the screenshot above. The level of detail we’re able to extract from GPT is nothing short of incredible.

It is difficult to overstate just how transformative LLMs are for this kind of use case. If you are generating new content from scratch, are working with large unstructured bodies of text, or need to be able to tweak the behavior of your system at runtime, then there is simply no replacement for a solid LLM.

On the other hand, there are a wide variety of tasks which are not generative in nature. The simplest such example is classification. Every single deep learning professional has—at some point in their career—built the mandatory “cat vs dog” classification model as an exercise in using convolutional neural networks.

Determining whether an image is of a cat or a dog requires no generative step. It’s a very simple open-and-shut task with a clear objective, and therefore a clear fitness function. It doesn’t really make a whole lot of sense to call out to gpt-4-1106-vision-preview to solve this problem because we have far better tools available.

Even if GPT-4 Turbo with Vision were able to beat the state-of-the-art image classifier in terms of raw performance, it still likely wouldn’t be the best fit for the cat vs dog task. At almost any given level of performance, the supervised learning approach will deliver results more cheaply and at lower latency.

Users are generally OK with the idea that their chatbot will take a few seconds to “think” and get back to them. They generally aren’t OK with their web applications regularly showing a loading spinner for 10+ seconds because it’s waiting on an upstream LLM to work its magic.

Given the well-documented hardware constraints, I don’t think the latency issue will be solved for a very long time. We’ll see improvements over time, as we did with the November GPT models, but users don’t really care if you cut their wait time from 12s down to 6s. They clicked off at 3s.

In summary, my general thesis is that you want to use LLMs for what they are really good at. If you want to rapidly explore a problem space, materialize novel content from scratch, or work in a dynamic environment which demands runtime configuration of model tasks, then LLMs are the best-in-class tool for your problem.

If your problem doesn’t fall under that scope—and it’s a pretty big scope!—then you might just be better off with more traditional supervised learning approaches.

In the next section we’ll look at some common machine learning tasks and discuss how LLMs perform.

Tasks you might not want to use LLMs for

Classification

When we tried building an essay type classifier for AdmitYogi Essays using only GPT, we found that we were able to classify essays with about 80% accuracy. That doesn’t seem so bad on the surface, but in reality state-of-the-art classifiers can get far better results than that. Even a fairly simple random forest can outperform GPT in this case.

We ran into similar limitations with Winston—our customer support AI. The first step in the processing pipeline is a two-phase classification problem where we try to identify:

- Is this communication relevant? Winston listens to a shared team email inbox which receives—among other things—product updates from Mailchimp. We don’t want it trying to respond to emails like these!

- What topic is this communication about? We aren’t willing to trust Winston with certain kinds of inquiries. While we’re happy for Winston to answer questions about the curricula on offer at Crimson Global Academy, we aren’t happy with the idea of Winston servicing requests for official school letters.

We get OK results using GPT as a classification layer, but they aren’t perfect and some messages fall through the cracks. This problem is a lot harder to build a model for than the AdmitYogi use case because Winston needs to handle a much wider range of inputs than AdmitYogi Essays does, but it would still be possible to train a superior in-house model if we wanted to.
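To make the two-phase structure concrete, here is a minimal sketch of the gate that sits in front of the assistant. The label strings, the `ALLOWED_TOPICS` set, and the `triage` function are hypothetical; the two classifiers are passed in as plain functions, so the same gate works whether they are backed by GPT or by an in-house model.

```python
from typing import Callable, Optional

# Hypothetical topic labels; the real allow-list is not given in this post.
ALLOWED_TOPICS = {"curriculum"}

Classifier = Callable[[str], str]  # maps an email body to a label


def triage(email: str, relevance: Classifier, topic: Classifier) -> Optional[str]:
    """Return the topic if the assistant may answer, otherwise None."""
    # Phase 1: drop newsletters, product updates, and other noise.
    if relevance(email) != "relevant":
        return None
    # Phase 2: only proceed for topics we trust the assistant with.
    label = topic(email)
    return label if label in ALLOWED_TOPICS else None
```

Keeping the gate independent of the classifier implementation means you can start with prompts and swap in a trained model later without touching the rest of the pipeline.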

For many classification problems, particularly those built on tabular or pre-computed features, gradient boosting with XGBoost is a state-of-the-art technique. XGBoost also happens to be very compute-efficient, making it a good option even in cases where you can’t afford to spend much capital on training bespoke models.
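To give a sense of how small a supervised text classifier can be, here is a toy relevance filter. Note the swap: this is a tiny multinomial Naive Bayes written in standard-library Python, not XGBoost or a random forest, chosen only because it fits in a few lines. The training sentences and labels are made up for illustration.

```python
import math
from collections import Counter, defaultdict


class NaiveBayes:
    """Toy multinomial Naive Bayes over whitespace tokens (illustration only)."""

    def fit(self, texts, labels):
        self.word_counts = defaultdict(Counter)
        self.label_counts = Counter(labels)
        for text, label in zip(texts, labels):
            self.word_counts[label].update(text.lower().split())
        self.vocab = {w for counts in self.word_counts.values() for w in counts}
        return self

    def predict(self, text):
        n_docs = sum(self.label_counts.values())

        def score(label):
            counts = self.word_counts[label]
            total = sum(counts.values()) + len(self.vocab)  # add-one smoothing
            prior = math.log(self.label_counts[label] / n_docs)
            return prior + sum(math.log((counts[w] + 1) / total) for w in text.lower().split())

        return max(self.label_counts, key=score)


# Made-up training data in the spirit of the shared-inbox example.
texts = [
    "your mailchimp product update is here",
    "new features in this product newsletter",
    "can you tell me about the maths curriculum",
    "what subjects does the academy offer",
]
labels = ["irrelevant", "irrelevant", "relevant", "relevant"]
model = NaiveBayes().fit(texts, labels)
```

A real system would need far more data and a proper evaluation set, but inference for a model like this is essentially free compared to an API call.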

Named entity recognition (NER)

Candidly, GPT is horrible at named entity recognition. I strongly recommend you use a different tool for the job.

CoNLL-2003 is a fairly standard test dataset for evaluating NER performance. State of the art models can get up to 94.6% F1-score on this particular dataset, and top-tier performance on this task has exceeded 90% for many years at this point.

GPT-3.5 Turbo performance with only prompt engineering came in at 73.44%. When adding in-context examples based on embedding vector distances, performance went up to 90.91%.

The problem here is pretty obvious. GPT sucks if you only prompt engineer, and if you have enough data to run a k-nearest-neighbor (kNN) search over pre-labelled sentences for inclusion in your prompt, then you likely have the data you need to train a bespoke model anyway.
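For readers unfamiliar with the technique, picking in-context examples by embedding distance looks roughly like the sketch below. The function names and the prompt layout are assumptions for illustration, and the two-dimensional vectors in the usage note stand in for real embeddings.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def nearest_examples(query_vec, labelled, k=2):
    """labelled: list of (embedding, sentence, annotation) tuples."""
    ranked = sorted(labelled, key=lambda ex: cosine(query_vec, ex[0]), reverse=True)
    return ranked[:k]


def build_prompt(sentence, examples):
    """Few-shot prompt: the k nearest labelled sentences, then the new one."""
    shots = "\n".join(f"Sentence: {s}\nEntities: {a}" for _, s, a in examples)
    return f"{shots}\nSentence: {sentence}\nEntities:"
```

Every tuple in `labelled` is a hand-annotated sentence, which is exactly the kind of data you would otherwise use to train a dedicated NER model.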

In the case of NER, you probably don’t even need to train a bespoke model. Right out of the box, spaCy is equipped with an NER parser that scores 91.6% on the CoNLL dataset. That beats out the GPT+embeddings approach, and you’re also going to be able to run spaCy for a far lower price than what you would end up spending on OpenAI’s APIs.

Simple sequence-to-sequence transforms

This one is a bit of a gimme, because named entity recognition is itself fundamentally a sequence-to-sequence problem. Formally, we can define NER as taking in an input sequence of tokens and then outputting a sequence of class predictions.
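As a small illustration of that framing, the sketch below takes one tag per token and collapses the tags back into entities. It assumes BIO-style tags ("B-" begins an entity, "I-" continues it, "O" is outside), which is a common convention and not something specific to this post; `bio_to_spans` is a hypothetical helper.

```python
def bio_to_spans(tokens, tags):
    """Collapse per-token BIO tags into (entity_text, label) pairs."""
    spans, current, label = [], [], None
    for token, tag in zip(tokens, tags):
        starts_new = tag.startswith("B-") or (tag.startswith("I-") and tag[2:] != label)
        if starts_new:
            if current:
                spans.append((" ".join(current), label))
            current, label = [token], tag[2:]
        elif tag.startswith("I-"):
            current.append(token)  # continue the open entity
        else:  # "O": outside any entity
            if current:
                spans.append((" ".join(current), label))
            current, label = [], None
    if current:
        spans.append((" ".join(current), label))
    return spans


tokens = ["Angela", "Merkel", "visited", "New", "York", "."]
tags = ["B-PER", "I-PER", "O", "B-LOC", "I-LOC", "O"]
print(bio_to_spans(tokens, tags))
# [('Angela Merkel', 'PER'), ('New York', 'LOC')]
```

The model's job is only to produce `tags`; the input and output sequences have the same length, which is what makes NER a particularly simple sequence-to-sequence problem.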

The truth, though, is that GPT and other LLMs are actually pretty good at a number of different sequence-to-sequence problems. The older text completion (text-davinci-*) models were reasonably competitive at machine translation, and GPT-4 is comparable to commercial translation products. These are very good results for a general-purpose language model.

LLMs are a somewhat poor fit for “simple” sequence-to-sequence problems, however, because LLMs are pretty good tools for producing smaller models that can replace the LLM.

Let’s say you are building a meeting summarizer tool. The average human is not particularly good at speaking clearly, so the transcripts you have to work with are full of disfluencies. Virtually every bit of the transcript is riddled with stuttering, filler words, repaired utterances, and so on.

In order to improve the signal-to-noise ratio of your transcripts you decide to patch these disfluencies prior to performing your actual summarization work. GPT and other LLMs, such as those available through Amazon Bedrock, actually do a really good job here, but there are a couple of problems with using them:

- LLMs are quite expensive and slow for this simple use case.

- Because LLMs are good at this task and the task is relatively simple, you can actually use an LLM to synthesize all the training data you need to produce your own model.

That second point is killer. According to my benchmarks, the November GPT-3.5 Turbo model can output ~77.5 tokens/second per request you throw at it. Request limits for the GPT-3.5 Turbo models are so high that you can process ~900,000 tokens per minute without too much trouble.

So: cut that figure in half to account for your input tokens, and then pessimistically assume you’ll lose 20% of your tokens to prompt instructions and cruft that GPT outputs alongside the synthetic data you are after. That leaves ~360,000 usable output tokens per minute. If you already have examples of disfluent text (i.e. you already have meeting transcripts), then at roughly 0.75 words per token you can synthesize ~270,000 words of corrected text per minute.

If you start completely from scratch, then you can generate ~135,000 words per minute of usable disfluent:fluent sentence pairs. That’s a ton of data in very short order. Run it for a day and you have hundreds of millions of words worth of training data—a substantially larger corpus than most languages have.

Assuming a roughly 1:1 input:output token ratio, your blended cost would be $0.0015 per 1k tokens. At 900,000 tokens per minute, a full day of generation comes to about 1.3 billion tokens, so you’d spend $1,944 to generate all of that training data. Even in absolute terms that’s a trivial cost, and relative to what such a dataset used to cost it might as well be zero.
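The back-of-the-envelope figures above can be reproduced with a few lines of arithmetic. Two things here are assumptions on my part: the 0.75 words-per-token conversion (a common rule of thumb for English that happens to reproduce the numbers quoted) and the reading that generating pairs from scratch halves the yield because both sides of each pair must be generated.

```python
TOKENS_PER_MINUTE = 900_000    # throughput quoted above
OUTPUT_SHARE = 0.5             # half of the budget goes to input tokens
USABLE_SHARE = 0.8             # 20% lost to instructions and cruft
WORDS_PER_TOKEN = 0.75         # assumption: rule of thumb for English text
PRICE_PER_1K_TOKENS = 0.0015   # blended USD price quoted above
MINUTES_PER_DAY = 24 * 60

usable_tokens = TOKENS_PER_MINUTE * OUTPUT_SHARE * USABLE_SHARE
words_per_minute = usable_tokens * WORDS_PER_TOKEN
# Assumption: from scratch, both halves of each pair must be generated.
pair_words_per_minute = words_per_minute / 2
words_per_day = words_per_minute * MINUTES_PER_DAY
cost_per_day = TOKENS_PER_MINUTE * MINUTES_PER_DAY / 1000 * PRICE_PER_1K_TOKENS

print(f"usable tokens/min:       {usable_tokens:,.0f}")
print(f"corrected words/min:     {words_per_minute:,.0f}")
print(f"pair words/min:          {pair_words_per_minute:,.0f}")
print(f"corrected words per day: {words_per_day:,.0f}")
print(f"cost per day (USD):      {cost_per_day:,.2f}")
```

Changing any one constant (a different price tier, a lower rate limit) shows quickly how sensitive the conclusion is; even at ten times the price the dataset would remain cheap.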

Take any base sequence-to-sequence model (such as T5), fine-tune it with that data, and you’ll end up with something that matches GPT’s performance without the drawbacks of using OpenAI’s API.

The terms of service rules around using OpenAI model output to produce “competing” models don’t apply here, because your internal disfluency correction model isn’t in competition with OpenAI’s API business. This is why startups like OpenPipe are able to operate without running afoul of OpenAI’s lawyers.

Build vs. buy: LLM edition

Something that has been lost in this hype cycle is that the point of in-context learning isn’t to match specialized state-of-the-art models in all use cases; it’s simply a very efficient tradeoff. LLMs are able to generalize to new tasks reasonably well with remarkably few samples, which makes the prototyping stage significantly faster than it used to be. Where you once needed to spend days or weeks gathering enough data to train a useful ML model, you can now prompt GPT and get surprisingly good outputs nearly instantly.

The flip side of this is that your ongoing costs are likely going to be much higher when using LLM APIs, and you will often get worse performance on top of that. A small tailor-made model for your application might be difficult to produce in the first place, but it is also radically more compute-efficient than pulling in a very large LLM such as GPT-3.5 Turbo (OpenAI has not published its parameter count; its predecessor GPT-3 had 175B parameters).

That custom model will also likely get far better latency, as LLMs are still frustratingly slow, even with recent speedups from OpenAI. While you can train custom models with lots of parameters, you usually don’t need to. I’ve trained a number of useful neural networks in my time, and they’ve never gone over a couple hundred million parameters. You simply don’t need massive models if you are solving a constrained problem, and, all else being equal, you should expect the smaller model to yield better inference speeds.

So what LLMs and their ability to learn in-context really buy you is reduced upfront overhead. You are getting to market much faster in exchange for higher ongoing costs. This tradeoff makes sense in a lot of cases, but you need to think about how long your product is likely to last. Over a long enough time horizon the upfront cost of training a model gets amortized down to nothing, and operational expenses start to dominate.
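One way to put a number on that tradeoff is a simple break-even calculation. The function below is a sketch, and the dollar amounts in the example are hypothetical placeholders, not figures from any real project.

```python
import math


def breakeven_months(upfront_cost, custom_monthly, llm_monthly):
    """Months until the custom model's cumulative cost no longer exceeds the LLM API's.

    Returns None if the custom model is not cheaper to run, in which case
    the upfront investment never pays for itself.
    """
    savings = llm_monthly - custom_monthly
    if savings <= 0:
        return None
    return math.ceil(upfront_cost / savings)


# Hypothetical numbers: $20,000 to build and train a custom model that costs
# $200/month to serve, versus $2,200/month in LLM API fees.
print(breakeven_months(20_000, 200, 2_200))  # 10
```

If your product is unlikely to outlive the break-even point, staying on the LLM API is the cheaper choice; if it will run for years, the upfront cost quickly becomes a rounding error.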

So do you need an LLM for your use case? For generative tasks I would say so; that is, after all, the use case these models are intended for. For more traditional machine learning workloads such as classification, however, I think the value proposition of LLMs is a lot less clear-cut. They might make sense early on in a product’s lifecycle, but you might want to consider moving to supervised approaches sooner rather than later in order to get maximum performance at minimum cost.