The OpenAI dashboard is a lesson in product management

On December 27, Crimson Education’s usage of GPT-4 doubled overnight and remained elevated up until early January. We see fluctuations like this all the time as usage of our applications tends to correlate pretty well with spend on services like AWS and OpenAI, so ordinarily we wouldn’t think much of it. In this particular case, however, the spike occurred over a period of time when most people weren’t using our apps due to being on holiday. Very unusual, and worth digging in to.

The problem was that OpenAI didn’t let you break down usage by individual API key. You were only able to report usage by users in your organization, and all of us were using API keys created from the same shared devs@crimsoneducation.org account. Oops.

While it’s easy for us as an engineering team to track OpenAI usage by product or feature, we aren’t the only people using OpenAI’s models. Other departments have their own API keys which get plugged in to tools such as Team-GPT, and we obviously have no visibility into usage accrued by these tools. I started work on breaking up our API keys into different user accounts, and drafted a long blog post reflecting on why OpenAI might not have built this functionality yet. Of course, as I was about to publish that post today, I noticed that in the intervening days they’ve gone ahead and actually shipped the thing.

All API keys created since December 20 are automatically enabled for per-key usage tracking, and keys created prior to that cutoff can have usage tracking enabled by going to the dashboard’s “API keys” section and hitting an “enable” button on each key you want to track.

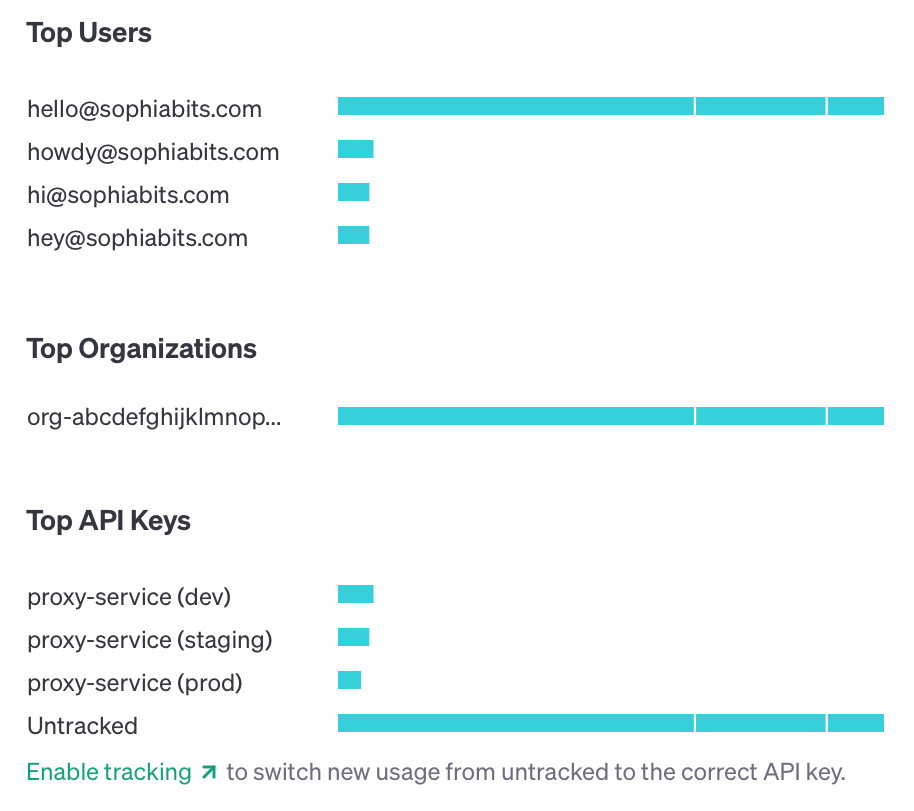

There’s a new “Top API Keys” section right below the “Top Organizations” section which gives you the actual itemized breakdown. Unfortunately, it seems that the dashboard is still limited to only showing requests per key, user, or organization.

Hopefully down the line we’ll be able to see token usage here as well, as tokens are a lot more important for cost analysis than the raw number of requests.

This was low priority for OpenAI

Customers have been requesting per-key usage tracking for quite a while now. When an individual API request to OpenAI can cost as much as it does, it seems obvious that customers would want a high degree of visibility into their API usage.

In some cases, the feedback around this missing capability has gotten quite heated:

It is ridiculous and anti-enterprise to require unmanaged accounts that are validated not against your organization but by a phone number and their own credit card; that each phone number can only be used twice and account deletion renders the phone number unusable. The whole system should be trashed and re-thought.

— _j

There’s a really good learning opportunity here for anyone who’s thinking of founding a startup, or who is working in a product team. Think about the context here. We have a feature request that is…

- entirely reasonable (some would even say that it’s table-stakes for an API with pricing like OpenAI’s)

- trivial to implement

- requested by a lot of customers

A less disciplined product team would have implemented this feature a long time ago. I’ve personally been in a lot of product meetings where some or all of these reasons have been used to justify adding a feature to the roadmap. The difficult pill to swallow for a lot of people is that none of these are particularly good reasons for scheduling in the work.

The ultimate point of building any new feature is to generate some kind of business return. The fact that a bunch of existing customers have requested a feature is orthogonal to whether doing that work will generate a return. It’s entirely possible for something to be widely-requested and have no bearing on churn rate or conversion rate.

In the case of OpenAI usage tracking, I’m not aware of a single person or company that closed their account or refused to use OpenAI’s services in the first place because of this missing capability. If that’s the case more broadly, then why would OpenAI ever decide to prioritize this feature?

It actually doesn’t matter how easy implementing this feature would be. The moment you decide to invest a week or two into building this feature is the moment you have chosen to delay other—potentially more valuable—feature releases. If you look at what OpenAI have shipped over the course of the past year, it’s clear that they haven’t been hurting for product ideas.

Improving things like rate limits, system stability, and releasing new APIs like assistants all seem significantly more valuable than improved usage reporting. OpenAI’s big target demographic right now are developers; not finance teams. While developers do care about cost optimization to some extent, they’re much more excited by the prospect of building cool things.

And from a business perspective you might find the limited cost attribution abilities frustrating, but the reality is that there is no other LLM available today which beats out the GPT-4 line in terms of performance. If your use case demands best-in-class machine intelligence and you can’t afford to invest in an inhouse team of ML experts—and a lot of businesses fall into this bucket—then you have no choice but to suck it up and use OpenAI.

If you find yourself building lots of things simply because they’ve been requested by users, then you either lack product-market fit or don’t understand why your business is successful. The “build better products” component of “advertising is for losers, building better products is for winners” is hard to do if you don’t understand why your product is good in the first place.

Conclusion

So at long last OpenAI have shipped per-key usage tracking. This handles one particular limitation of their platform, but other nice-to-have features such as per-key spend limits or IP allowlists are still missing. You also still can’t report usage based on the user parameter, so if you want to track usage by end-user of your application you still need to use something like LLM Report.

Lots of limitations here, but per-key usage tracking is likely the biggest request from API users. Knocking that one out is a pretty big win. At Crimson we were creating all of our API keys under a shared engineering@company.com OpenAI account and I had just finished splitting those keys out into separate accounts so I could get slightly more granular usage tracking. It’s nice nobody else needs to do that now.

But there’s still a lesson about product priorities to be learned from this. This feature is coming out pretty late in the game, and it’s not as though OpenAI are completely starved for resources. The late release of this feature is a great example of how “obvious quick wins” don’t necessarily need to be shipped the instant someone sees them.

You want to be deliberate about the work you choose to do, and not get swept away every time you hear something that sounds nice. The best product teams are in control of their destiny, and have a very clear understanding of the need they are fulfilling.

OpenAI’s product team understands that their business isn’t in making finance teams happy. Their business is in pushing for AGI and making high quality models available to everyday developers.

Why prioritize resources for a feature that doesn’t line up with those goals?