Is it time to stop requesting YAML from GPT?

The superpower of GPT and other LLMs is their ability to rapidly learn in-context off highly unstructured input text. You can immediately tell when you’re working inside a codebase which leverages LLMs extensively because of how much English language there ends up being intertwined with code.

I’ve lost the tweet at this point, but I remember reading all the way back in ~March of this year someone remarking that they had noticed themselves writing about as much English inside their .py files as they did Python. That’s pretty remarkable.

While LLMs might be good at dealing with unstructured text, our code hasn’t caught up yet. If you’re building something other than a chat app you’re inevitably going to run headfirst into a situation where this seam becomes a problem and you need to pull out structured data from your LLM.

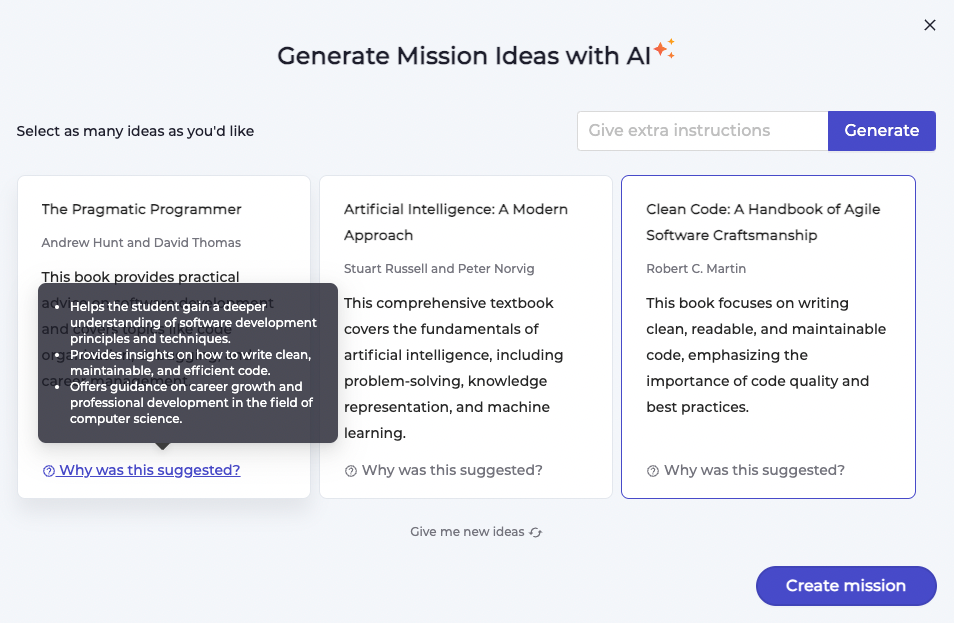

Consider the following UI we built recently at Crimson Education, which presents book recommendations to end users of the Crimson App:

We have three cards, and each card has distinct fields such as a title, description, author, and reasons why it was recommended. Under the hood there’s even more data available, such as the ISBN of each book. Parsing this data out reliably from freeform text generated by GPT would simply be impossible.

The way you get around this is by asking the model to output its response in a more machine-readable format such as JSON. Simply include a description of the schema you’re after, and usually you’ll get what you want:

In practice, however, there are a lot of edge cases to work through. Sometimes GPT will give you back the JSON object directly (as seen in the example!), and other times you’ll get it back code-fenced1. Sometimes you’ll get a valid and parseable JSON response, but other times you’ll get back something malformed.

Sometimes GPT will completely ignore your request for JSON output and give you back freeform text anyway!

Since the release of function calling in June 2023 it’s been possible to get stronger guarantees about your JSON output, but it’s a bit clunkier than “standard” prompt engineering and I’ve noticed GPT calling functions with malformed arguments, anyway, so the problem isn’t entirely solved by function calling.

Yesterday OpenAI announced “JSON mode” which guarantees the model will return a valid JSON object. You can opt in to it by setting a new response_format parameter when creating a chat completion using a supported model (gpt-3.5-turbo-1106 is a valid option at the time of writing). It looks like this:

const result = await openai.chat.completions.create({ model: 'gpt-3.5-turbo-1106', response_format: { type: 'json_object' }, messages: [{ role: 'user', content: 'What is 1+1? Respond in the following JSON format: { result: number }', }],}); // Note: You still get a string back!console.log(JSON.parse(result.choices[0].message.content));

It’s important to note that at least one of your messages needs to contain the word “JSON” in some form if you opt in to JSON mode. If you don’t fulfill this prerequisite then OpenAI will bail out early and respond with a 400.

A quick note here that it would have been trivially easy for OpenAI to type the response_format field as a plain string enum instead of as a discriminated union. This would have been a huge mistake! This way makes it simple for them to support passing options for the response format in an elegant, type-safe, and backwards-compatible manner. Nesting is always a good idea when designing your APIs as it affords maximum flexibility.

There are a few obvious benefits to this new mode which make it a pretty compelling option.

Advantages of JSON mode

Fewer input tokens. Almost all of my prompts up until now have included instructions along the lines of “Return the final answer ONLY with no further explanation”, but this addition is pointless when the model’s output is guaranteed to be valid JSON. Fewer boilerplate tokens means you have more room to work with, and the reduction in prompt tokens also saves you some cost.

Easier response handling. You may or may not have previously received code fenced JSON from GPT. You’d need to run a regex to try and find a code fence, and then fall back to parsing the entire response. Now that the response is guaranteed to be JSON, you can simply JSON.parse the response blindly.

Less reprompts. Historically, GPT’s ability to output JSON has been unreliable. JSON is an extremely fragile format where a single misplaced quote or comma can completely break parsing, and in my experience you get back slightly malformed JSON fairly often. Any syntax error you can’t fix via code necessitates a reprompt, which pushes up both latency and costs. With JSON mode, you no longer need to worry about this class of error.

Unfortunately, these benefits don’t come in a vacuum and there are some very real (and significant) downsides to using the new JSON response format which are less immediately obvious.

JSON mode tradeoffs

Limited chain-of-thought prompting

The first major limitation of the JSON response format is—ironically—that you only get back plain old JSON. You can’t emit JSONC or JSON5, which means you can’t ask GPT to perform chain-of-thought prompting inside a comment.

Here’s an example (borrowed from Elya Livshitz’ post comparing YAML and JSON as LLM output formats) of GPT failing to compute the answer to a math problem in one shot:

When using the text output format we have many strategies available to fix this issue:

- Ask for JSON5 output, and tell the model to think step-by-step inside comments.

- Ask for YAML output, and tell the model to think step-by-step inside comments.

- Have the model think step-by-step, emit a delimiter character (e.g.

<<break>>), and then return the final JSON output. - Include a special property in your output schema for storing the model’s thoughts.

- Split up the work into two separate prompts. The first one asks the model to “think” about the problem, and the second one asks the model to turn its thoughts into the final solution

Option #3 has lately been my preferred solution to this problem because it completely avoids the issue of the model messing up when it needs to “think” across multiple lines. Everything prior to the delimiter character is distinct from the final output object, which makes handling the response really easy.

The thoughts being plain text and not comments which get stripped out by a parser also makes storing the thoughts somewhere for use down the line trivial, too. This is really handy for debugging purposes.

Unfortunately, your only real option when using the new JSON output format is #4. It works well enough, but it does mean that the model’s reasoning ends up intertwined with your response object:

You may not want to return the model’s reasoning to your API consumer, which means you have an extra chore on your hands in the form of tidying up the object before sending it back. The other options all resulted in a very clean separation between reasoning and the result, so this was never a problem.

Note that it’s completely legitimate (although not recommended) for a JSON object to contain duplicate keys. This means that—just like if you were asking the LLM to “think” inside comments—you can have multiple bits of reasoning when using special properties. It’s fine to have multiple _steps properties inside your requested JSON schema.

No minification

While you do save a little bit on input tokens by avoiding boilerplate, your spend on output tokens (the more expensive line item!) will increase. At the time of writing, it doesn’t seem like the JSON response format supports generating minified output which means you end up “wasting” tokens on whitespace.

Consider the following JSON object:

{ "A": 900040}

In its unminified form this object tokenizes to 10 tokens. Minification yields a 40% reduction down to only 6 tokens. This is an extreme example which showcases a “best case” scenario for minification. Minification is largely an exercise in saving whitespace tokens, and here whitespace is a significant proportion of the object owing to my very short property name and value.

A more realistic example such as the following book recommendation yields a 21% token reduction—from 33 to 26. Not quite 40%, but still a significant reduction.

{ "title": "The Great Gatsby", "author": "F. Scott Fitzgerald", "isbn": "9780593311844"}

Does this mean you’ll end up spending more overall on GPT? I’m not entirely sure. All of the projects I’ve worked on recently have been using YAML as our output format so I don’t have solid metrics on how often GPT emits invalid JSON.

While you might end up with 25% more output tokens, you do completely avoid one failure mode where you would have otherwise needed to reprompt entirely. Making one slightly more expensive request is going to be better than making two slightly cheaper requests any day.

Of course, the issue is that YAML really is just a much better output format for GPT and other LLMs. The book recommendation displayed earlier is only 26 tokens when output as YAML which matches the minified JSON, and it can even be brought all the way down to only 22 tokens if you’re okay with omitting quotes. You’ll need to deal with isbn parsing as a number in this case, but that’s pretty simple to do.

No top-level arrays

This exacerbates the increased token usage highlighted in the previous section. In the case of Crimson App’s book recommender we want to produce three different recommendations, and for reasons of latency and quality we want to retrieve these recommendations with a single prompt. This pattern of requesting multiple outputs for a single prompt is very common.

As our book recommender is built on top of the existing text response format, we’re free to request a list of YAML objects as output. This gives us a nice, compact response which minimizes output token consumption.

With JSON mode, however, the best you can do is envelope the array inside an object:

Remember the contrived example where I achieved a 40% reduction in tokens through minifying the JSON? In this more realistic example, it’s actually possible to reduce tokens by 42% (from 76 to 44) through removal of the books envelope and then minifying the resulting array. That’s a pretty significant difference! From a different perspective, we’ve increased our generated tokens (and their cost) by 73%.

Should you use JSON mode?

While JSON mode has clear deficits today, I think it’s absolutely worth using going forward. Existing prompts that are battle-tested and working in production can be left alone for now.

JSON mode is a lot less token efficient than an ideally engineered text mode prompt, but getting a guarantee from OpenAI that the response is valid JSON is huge. Reprompting is an expensive waste of time that has horrible consequences for your app’s user experience, and up until now it has been impossible to guarantee that GPT will always respond with structured data to a prompt.

And structured data that will successfully parse, at that! YAML has proven to be a far more resilient output format in my work, but there were still edge cases. It’s still possible that the response won’t match your expected schema[^3 while I’ve been putting JSON mode through its paces, I haven’t noticed any incorrect response schemas. I can’t say conclusively that this failure mode has been fixed as part of the JSON mode feature, however.]—but then that’s always been the case.

So while you will spend more on individual prompts, I suspect that you will need to send out fewer prompts overall. This is a particular boon for users of GPT-4, as while rate limits have improved a lot since I first wrote about them they still cap out pretty early compared to other API providers you’ll be operating with.

In any event, most of JSON mode’s limitations have to do with token inefficiency and I imagine these are going to be easy problems for OpenAI to solve. Outputting minified JSON instead of pretty JSON, for instance, should in theory be possible with a simple update of the training data being used. Outputting top-level arrays might be harder, if only because—I think—there are fewer examples of top-level JSON arrays compared to JSON objects floating around on the Internet.

The limitations around chain-of-thought prompting are a bit frustrating, but adding synthetic keys to your output schema for the model to “think” inside isn’t the end of the world. If you stick to a convention of always indicating these keys with an underscore (or other character) then it will be possible to automate the process of stripping these fields from API responses to minimize developer friction.

Should you use JSON mode? Yes. It’s a big step forward for GPT.

- This variation actually explains most of the “regression” in ChatGPT’s code generation performance observed by this arXiv paper. The authors simply eval’d GPT’s response as-is without trying to handle edge cases like Markdown code fences.↩