Low and steady write rates can be misleading

A common mistake in system design interviews is to underestimate a low but steady write rate. Many candidates quickly dismiss a write rate of 1 request per second as insignificant, but this isn't always the case—especially in a startup environment where growth is measured week-over-week.

Small write volumes can accumulate significantly over time and cause all sorts of downstream problems. During a major Postgres version upgrade, Retool found that half of their 4 TB database was taken up by audit events, many of which didn’t need to be readily accessible given their age. At 1 write per second, you will accumulate roughly 31.5 million rows over the course of a year (1 write × 86,400 seconds per day × 365 days). This isn’t an overwhelming number of rows, and depending on the details of what you’re doing it is most likely fine, but it’s a larger number than most people expect.
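The arithmetic is worth being able to do on the spot. A minimal sketch, assuming a constant write rate and a 365-day year (the function name is just for illustration):

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400
DAYS_PER_YEAR = 365             # ignoring leap years


def rows_per_year(writes_per_second: float) -> int:
    """Rows accumulated over one year at a constant write rate."""
    return round(writes_per_second * SECONDS_PER_DAY * DAYS_PER_YEAR)


print(f"{rows_per_year(1):,}")  # 31,536,000
```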

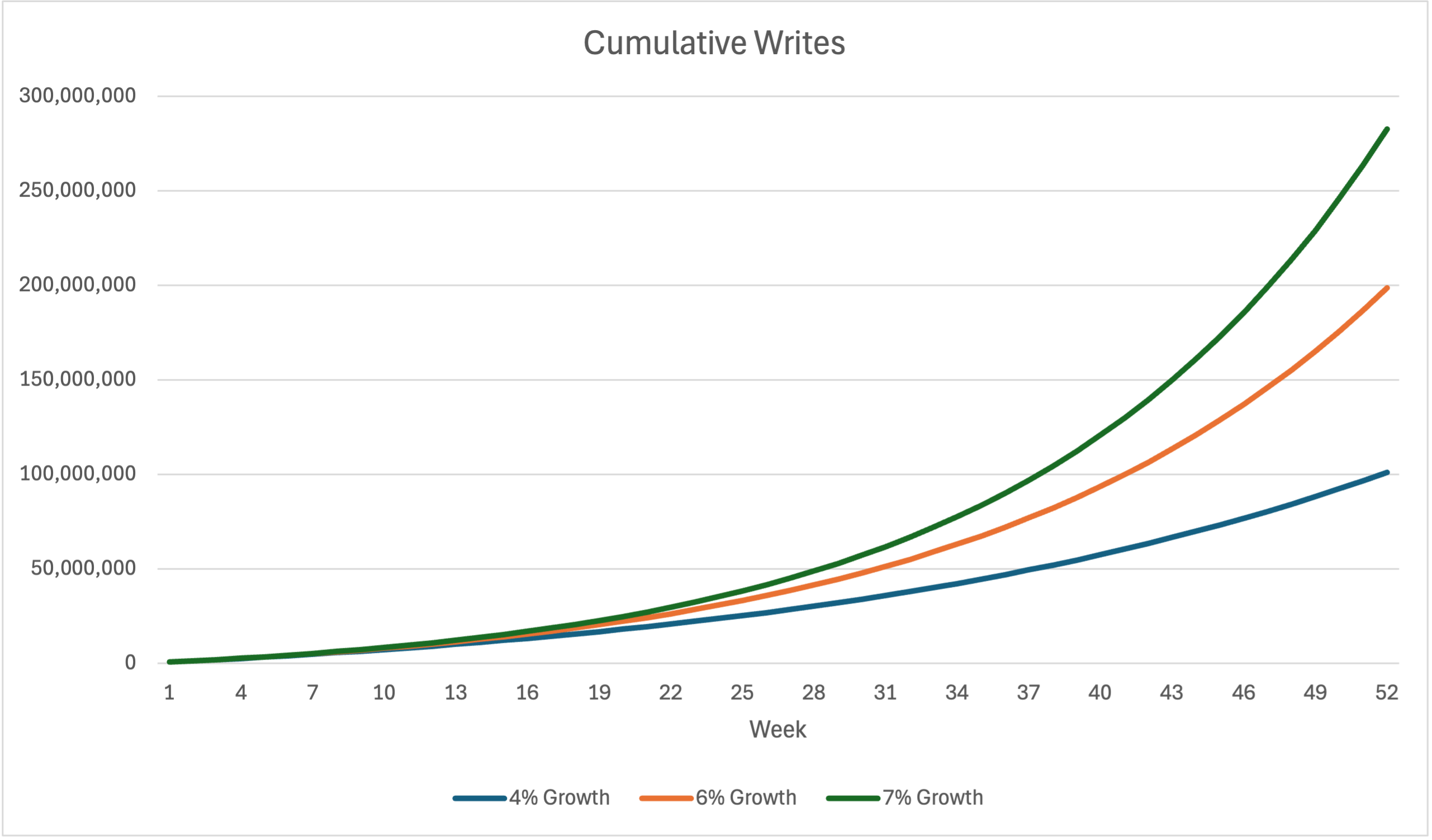

That 31.5 million figure assumes your company sees zero growth over the year, which is unlikely to be true. A successful startup will grow week-over-week, and weekly growth compounded over the course of a year can balloon writes significantly. The graph below shows the cumulative number of rows written during year 1 under three different weekly growth rates:

At the high end we’re looking at a bit under 300 million rows written. By the end of the year we’d be writing 2.9 million rows per day, which works out to an annual run rate of 1.05 billion rows even if growth were to halt completely. This is a very large number of rows; you will almost certainly need to plan scaling strategies for this case. Broadly speaking, the most common use case for this kind of low-but-steady, append-only write volume is audit logs, which you often don’t need to hold on to forever. A good data retention policy may be all you need.
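To get a feel for how compounding produces numbers like these, here is a rough sketch. The text doesn’t list the three growth rates behind the graph, so the 1%, 3%, and 7% used here are assumptions; 7% is included because it lands close to the high-end figures quoted above. The model is also a simplification: the write rate is held constant within each week and stepped up at each week boundary.

```python
SECONDS_PER_DAY = 24 * 60 * 60
SECONDS_PER_WEEK = 7 * SECONDS_PER_DAY


def simulate_year(base_writes_per_second: float,
                  weekly_growth: float,
                  weeks: int = 52) -> tuple[float, float]:
    """Return (cumulative_rows, rows_per_day_at_end_of_period).

    The rate is constant within a week and multiplied by
    (1 + weekly_growth) at the end of every week.
    """
    rate = base_writes_per_second
    total = 0.0
    for _ in range(weeks):
        total += rate * SECONDS_PER_WEEK
        rate *= 1 + weekly_growth
    return total, rate * SECONDS_PER_DAY


# Assumed growth rates; the source does not state which ones it plotted.
for growth in (0.01, 0.03, 0.07):
    total, per_day = simulate_year(1.0, growth)
    print(f"{growth:.0%} weekly: {total / 1e6:,.0f}M rows in year 1, "
          f"{per_day / 1e6:.2f}M rows/day at year end")
```

Under these assumptions, 7% weekly growth starting from 1 write per second ends the year a bit under 300 million rows, writing roughly 2.9 million rows per day.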

The system design interview isn’t purely about delivering a working system; it’s also an opportunity for you to demonstrate knowledge of how the system might evolve and break in the future. A great candidate with relevant experience should be able to talk about typical scaling pathways, for example by remarking that the database tends to become the bottleneck in a typical startup’s infrastructure before the API servers do.

Some scaling problems can be dealt with in a reactive manner. It’s relatively easy to throw a Redis cluster into your system, so you might decide to skip a caching layer in the early days of a startup and come back to it later when scaling reads actually becomes a problem for you. A great many scaling decisions fall into this category, which is why startups can “get away” with accumulating lots of early technical debt.

But then there are scaling problems which are best dealt with proactively. It’s a predictably bad idea to run your MVP’s database on a single EC2 node you manage yourself, and you should have read replicas from day one. Low but steady write traffic can potentially fall into this category depending on the nature of the system and its growth rate.

A great way to distinguish yourself from other candidates at the system design stage is to think carefully through write traffic:

- Consider the consistency and durability requirements. If you’re interviewing at a FinTech and storing audit logs, then there will be stringent regulatory requirements your system must meet. Strong consistency and/or high durability requirements can have big impacts on how you think about writes.

- Think about the access patterns. Are there joins involved? Do you need to support flexible queries that can’t always rely on index scans? Either of these should nudge you towards a more sophisticated design, like sharding.

- Ask about the expected weekly growth rate. A nominal (0-1%) rate makes it more likely that you can brute force through possible scaling challenges, whereas higher rates might necessitate a more scalable design.
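The growth-rate question in particular lends itself to a quick back-of-the-envelope check. The sketch below estimates how many weeks it takes to accumulate a given number of rows at different weekly growth rates. The 100 million row budget is a hypothetical threshold chosen for illustration, not a real limit of any database, and the model assumes the rate is constant within each week.

```python
from typing import Optional

SECONDS_PER_WEEK = 7 * 24 * 60 * 60


def weeks_until_rows(row_budget: float,
                     base_writes_per_second: float = 1.0,
                     weekly_growth: float = 0.0,
                     max_weeks: int = 52 * 20) -> Optional[int]:
    """Number of whole weeks until cumulative rows reach row_budget.

    Returns None if the budget is not reached within max_weeks.
    """
    rate = base_writes_per_second
    total = 0.0
    for week in range(1, max_weeks + 1):
        total += rate * SECONDS_PER_WEEK
        if total >= row_budget:
            return week
        rate *= 1 + weekly_growth
    return None


# Hypothetical budget: the row count at which you want a plan in place.
ROW_BUDGET = 100_000_000
for growth in (0.0, 0.01, 0.07):
    weeks = weeks_until_rows(ROW_BUDGET, weekly_growth=growth)
    print(f"{growth:.0%} weekly growth: {weeks} weeks to {ROW_BUDGET:,} rows")
```

At a nominal growth rate the budget is years away, which is what makes brute force viable; at a high growth rate it arrives within the first year, which is the argument for settling on a more scalable design up front.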